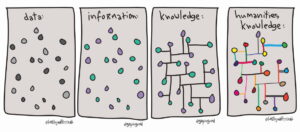

Two interesting but unrelated articles on the subject of digital data in the humanities recently appeared: a guest post in The Scholarly Kitchen by Chris Houghton on data and digital humanities, and an Aeon essay by Claire Lemercier and Claire Zalc on historical data analysis.

Houghton’s article emphasises the benefits of mass digitisation and large-scale analysis in the context of the increasing availability of digital data resources provided through digital archives and other sources. According to Houghton, “The more databases and sources available to the scholar, the more power they will have to ask new questions, discover previously unknown trends, or simply strengthen an argument by adding more proof” (Houghton 2022). The challenge he highlights is that although digital archives increasingly provide access to large bodies of data, the work entailed in exploring, refining, checking, and cleaning the data for subsequent analysis can be considerable.

> An academic who runs a large digital humanities research group explained to me recently, “You can spend 80 percent of your time curating and cleaning the data, and another 80 percent of your time creating exploratory tools to understand it.” … the more data sources and data formats there are, the more complex this process becomes. (Houghton 2022)

In recent years, digital access to unpublished archaeological reports (so-called ‘grey literature’) has become increasingly transformational in archaeological practice. Besides being important as a reference source for new archaeological investigations including pre-development assessments (the origin of many of the grey literature reports themselves), they also provide a resource for regional and national synthetic studies, and for automated data mining to extract information about periods of sites, locations of sites, types of evidence, and so on. Despite this, archaeological grey literature itself has not yet been closely evaluated as a resource for the creation of new archaeological knowledge. Can the data embedded within the reports (‘grey data’) be re-used in full knowledge of their origination, their strategies of recovery, the procedures applied, and the constraints experienced? Can grey data be securely repurposed, and if not, what measures need to be taken to ensure that it can be reliably reused?

One of the features of the world-wide COVID-19 pandemic over the past eighteen months has been the significance of the role of data and associated predictive data modelling which have governed public policy. At the same time, we have inevitably seen the spread of misinformation (as in false or inaccurate information that is believed to be true) and disinformation (information that is known to be false but is nevertheless spread deliberately), stimulating an infodemic alongside the pandemic. The ability to distinguish between information that can be trusted and information which can’t is key to managing the pandemic, and failure to do so lies behind many of the surges and waves that we have witnessed and experienced. Distinguishing between information and mis/disinformation can be difficult to do. The problem is all too often fuelled by algorithmic amplification across social media and compounded by the frequent shortage of solid, reliable, comprehensive, and unambiguous data, and leads to expert opinions being couched in cautious terms, dependent on probabilities and degrees of freedom, and frustratingly short on firm, absolute outcomes. Archaeological data is clearly not in the same league as pandemic health data, but it still suffers from conclusions drawn on often weak, always incomplete data and is consequently open to challenge, misinformation, and disinformation.