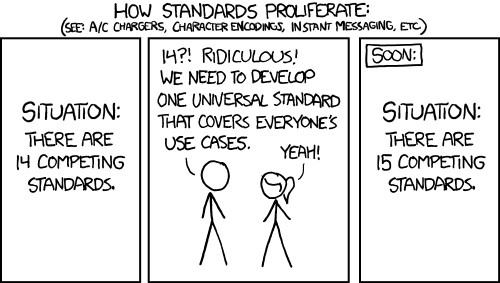

We understand knowledge construction to be social and combinatorial: we build on the knowledge of others, we create knowledge from data collected by ourselves and others, and so on. Although we pay a lot of attention to the processes behind the collection, recording, and archiving of our data, and are concerned about ensuring its findability, accessibility, interoperability, and reusability into the future, we pay much less attention to the technological mediation between ourselves and those same data. How do the search interfaces which we customarily employ in our archaeological data portals influence our use of them, and consequently affect the knowledge we create through them? How do they both enable and constrain us? And what are the implications for future interface designs?

As if to underline the lack of attention to interfaces, it’s often difficult to trace their history and development. It’s not something that infrastructure providers tend to be particularly interested in, and the Internet Archive’s Wayback Machine doesn’t capture interfaces which use dynamically scripted pages, which writes off the visual history of the first ten years or more of development of the Archaeology Data Service’s ArchSearch interface, for example. The focus is, perhaps inevitably, on maintaining the interfaces we do have and looking forward to developing the next ones, but with relatively little sense of their history. Interfaces are all too often treated as transparent, transient – almost disposable – windows on the data they provide access to.

In recent years, digital access to unpublished archaeological reports (so-called ‘grey literature’) has become increasingly transformational in archaeological practice. Besides being important as a reference source for new archaeological investigations including pre-development assessments (the origin of many of the grey literature reports themselves), they also provide a resource for regional and national synthetic studies, and for automated data mining to extract information about periods of sites, locations of sites, types of evidence, and so on. Despite this, archaeological grey literature itself has not yet been closely evaluated as a resource for the creation of new archaeological knowledge. Can the data embedded within the reports (‘grey data’) be re-used in full knowledge of their origination, their strategies of recovery, the procedures applied, and the constraints experienced? Can grey data be securely repurposed, and if not, what measures need to be taken to ensure that it can be reliably reused?

In recent years, digital access to unpublished archaeological reports (so-called ‘grey literature’) has become increasingly transformational in archaeological practice. Besides being important as a reference source for new archaeological investigations including pre-development assessments (the origin of many of the grey literature reports themselves), they also provide a resource for regional and national synthetic studies, and for automated data mining to extract information about periods of sites, locations of sites, types of evidence, and so on. Despite this, archaeological grey literature itself has not yet been closely evaluated as a resource for the creation of new archaeological knowledge. Can the data embedded within the reports (‘grey data’) be re-used in full knowledge of their origination, their strategies of recovery, the procedures applied, and the constraints experienced? Can grey data be securely repurposed, and if not, what measures need to be taken to ensure that it can be reliably reused? One of the features of the world-wide COVID-19 pandemic over the past eighteen months has been the significance of the role of data and associated predictive data modelling which have governed public policy. At the same time, we have inevitably seen the spread of misinformation (as in false or inaccurate information that is believed to be true) and disinformation (information that is known to be false but is nevertheless spread deliberately), stimulating an infodemic alongside the pandemic. The ability to distinguish between information that can be trusted and information which can’t is key to managing the pandemic, and failure to do so lies behind many of the surges and waves that we have witnessed and experienced. Distinguishing between information and mis/disinformation can be difficult to do. The problem is all too often fuelled by algorithmic amplification across social media and compounded by the frequent shortage of solid, reliable, comprehensive, and unambiguous data, and leads to expert opinions being couched in cautious terms, dependent on probabilities and degrees of freedom, and frustratingly short on firm, absolute outcomes. Archaeological data is clearly not in the same league as pandemic health data, but it still suffers from conclusions drawn on often weak, always incomplete data and is consequently open to challenge, misinformation, and disinformation.

One of the features of the world-wide COVID-19 pandemic over the past eighteen months has been the significance of the role of data and associated predictive data modelling which have governed public policy. At the same time, we have inevitably seen the spread of misinformation (as in false or inaccurate information that is believed to be true) and disinformation (information that is known to be false but is nevertheless spread deliberately), stimulating an infodemic alongside the pandemic. The ability to distinguish between information that can be trusted and information which can’t is key to managing the pandemic, and failure to do so lies behind many of the surges and waves that we have witnessed and experienced. Distinguishing between information and mis/disinformation can be difficult to do. The problem is all too often fuelled by algorithmic amplification across social media and compounded by the frequent shortage of solid, reliable, comprehensive, and unambiguous data, and leads to expert opinions being couched in cautious terms, dependent on probabilities and degrees of freedom, and frustratingly short on firm, absolute outcomes. Archaeological data is clearly not in the same league as pandemic health data, but it still suffers from conclusions drawn on often weak, always incomplete data and is consequently open to challenge, misinformation, and disinformation.