AI and its Affordances

Putting aside the hype associated with artificial intelligence (and generative AI in particular), there’s a great deal of talk surrounding the application of AI within archaeology with numerous examples of AI approaches to automated classification, feature recognition, image analysis, and so on. But much of it seems fairly uncritical – perhaps because it is largely associated with exploratory and experimental approaches seeking to find a place for AI, although there is also something of a publication/presentation bias favouring positive outcomes (e.g., see Sobotkova et al. 2024, 7). AI is typically presented as offering greater efficiencies and productivity gains, and any negative effects, where noted, can be managed out of the equation. In many respects this conforms to the broader context of AI where it is often seen as possessing an almost mythical, ideological status, sold by large organisations as the solution to everything from overflowing mailboxes to the climate crisis.

Cobb’s survey of generative AI in archaeology (Cobb 2023) focusses on key application areas where genAI may be of meaningful use: research (e.g., Spennemann 2024), writing, illustration (e.g., Magnani and Clindaniel 2023), teaching, and programming (e.g., Ciccone 2024), although Cobb sees programming as the most useful, with mixed results elsewhere. However, Spennemann (2024, 3602-3) predicts that genAI will affect how cultural heritage is documented, managed, practiced, and presented, primarily through its provision of analysis and decision-making tools. Likewise, Magnani and Clindaniel (2023, 459) boldly declare genAI to be a powerful illustrative tool for depicting and interpreting the past, enabling multiple perspectives and reinterpretations, but at the same time admit that

… archaeologists will face unique challenges posed by AI, including not only its impact on the interpretation and depiction of the past but also the myriad of ways these reorganizations trickle into labor practices and power dynamics in our own field. … As with other technological developments in the field, AI should complement and expand our ability to work rather than overturn the skillsets required to do so. (Magnani and Clindaniel 2023, 453)

There is a hint here that there may be a downside to AI to sit alongside the supposed benefits. But in archaeology, as in the broader world beyond, the focus still seems to be on positive claims, with much less recognition of the potential repercussions of AI. Recognising these requires the marketing hype and evangelistic techno-determinism to be stripped away. For example, some years ago, Elish and boyd emphasised that AI had to be understood as a “socio-technical” concept, stressing the importance of the “specific social perceptions and context of development and use” (2018, 58), alongside the technical aspects which are more often given prominence. There’s an inevitability about focusing on the irresistible attractions of a technology like AI, which, as I’ve suggested previously, needs to be broken down. This is not in itself a straightforward task, since simply reversing the focus from benefits to risks and harms is not the whole answer.

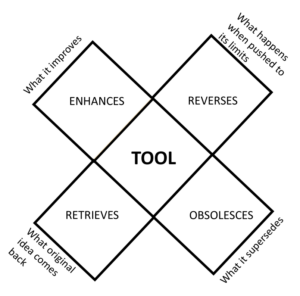

Because of this complexity, and because it can be difficult to think critically about our tools within the immediate context of their application, we need a means of developing greater critical scrutiny of our tools. To that end, I suggest McLuhan’s Laws of Media (e.g. McLuhan and McLuhan 1988) can be used as a means of better understanding our use of and relationship with artificial intelligence (see, for instance, Aguado-Terrón and Grandío-Pérez 2024; Gustafson 2024; Ott 2023). Many will be rightly sceptical about the use of the term ‘laws’ here, but these are not laws as such – McLuhan describes them as exploratory tools or ‘probes’ that provide insights into the effects of a technology; they are more heuristic device than scientific law. McLuhan summarised his approach as a tetrad: four probes which observe the impacts of artefacts upon us (Figure 1). According to McLuhan, a medium will amplify, enhance or intensify some function, obsolesce or supersede an earlier medium that used to perform a similar function, retrieve a previously obsolescent medium from the past, and when pushed far enough, developed to its full potential, will flip or reverse its original characteristics.

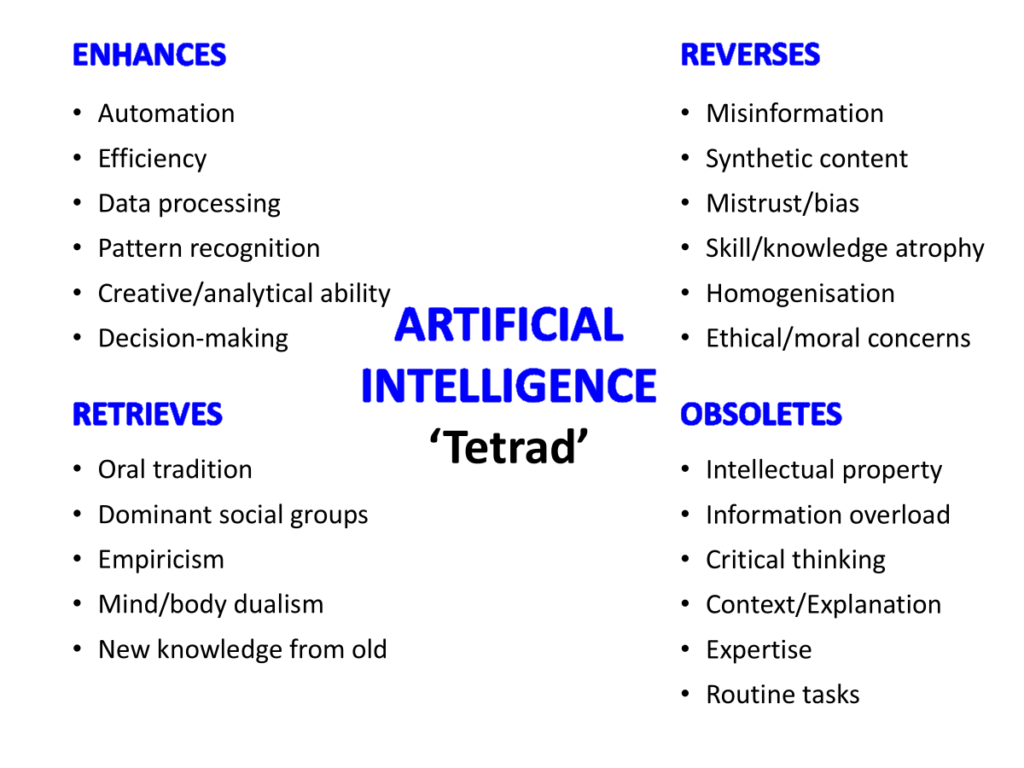

We can apply this directly to artificial intelligence tools within the context of their specific application, or (as here) at a higher scale. Figure 2 shows a series of suggestions, though they are naturally incomplete and open to debate. The Enhancement section – given what was said at the outset here – almost writes itself. Elsewhere, we can see AI as retrieving oral traditions (conversational AI, communicating knowledge through recitation, etc.), the empowerment of certain groups (AI experts, prompt engineers, etc.), mind/body dualism (the computer as an extension of the human mind), and so on. At the same time, AI can be seen as superseding or obsoleting intellectual property, critical thinking, expertise, etc. AI also reverses and brings to the fore misinformation and mistrust, synthetic content (AI noise), atrophy of skills and knowledge (e.g. lost problem-solving skills), the homogenisation of thought and expression, as well as a host of ethical/moral concerns.

These ‘laws’ go beyond straightforward affordances and disaffordances and beyond the four probes themselves in that there are also a series of inherent oppositions between the probes: between retrieval and obsolescence, between enhancement and reversal (the flip), between retrieval and enhancement, and between obsolescence and reversal. So, for instance, we can set automation and efficiency against misinformation and bias, empiricism against creative and analytical abilities, critical thinking against homogenisation, intellectual property against ethical concerns, and so on. In combination they provide a more complex and nuanced perspective, certainly more than a straightforward binary divide between affordances and disaffordances. In the process they also enable us to better perceive and influence the transformations of new technologies.

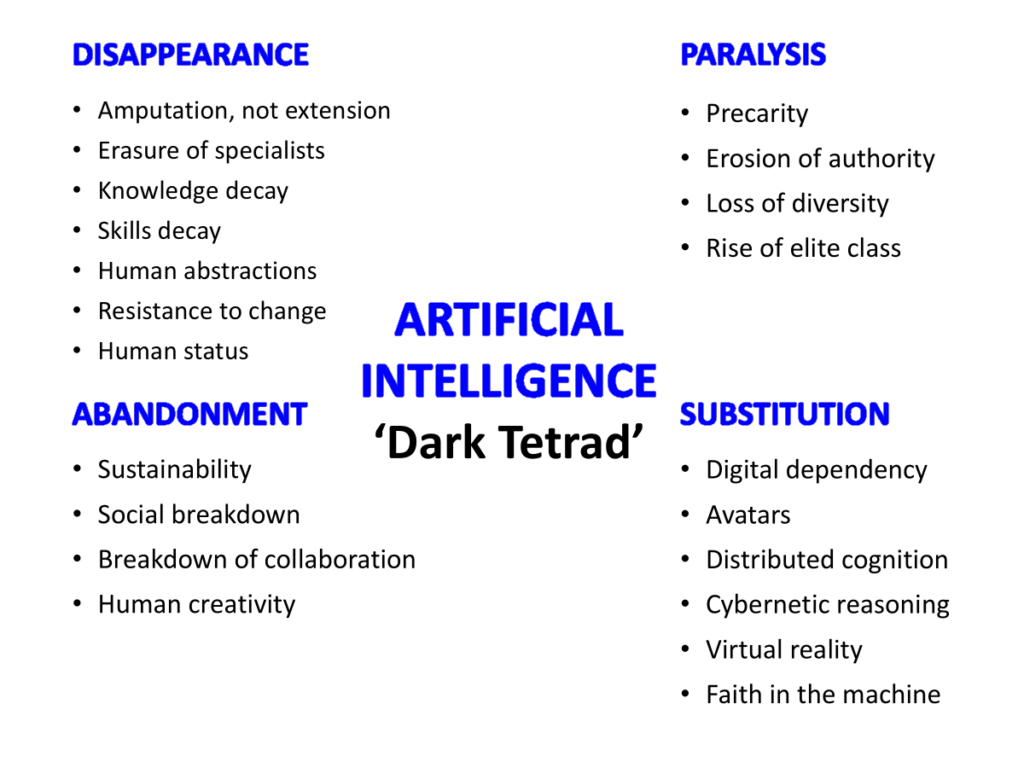

And we can take this further: Kroker (2014) suggested that McLuhan’s tetrad can be inverted: that there is a mirror image, what he calls the ‘dark tetrad’. He argued that this applies at the point where technology exceeds the laws of media and recognises that every technological possibility has an equal number of exclusions and prohibitions (2014, 25). In this light, he saw that every enhancement has an equivalent disappearance, every retrieval involves an abandonment, every obsolescence has a balancing substitution, and every reversal becomes inertia, stasis or paralysis (2014, 25; 177ff). The dark tetrad asks not only what has been achieved by the laws of media and digital progress, but what has been obscured in the process (2014, 179). Furthermore, he suggested that when we recognise this, a spirit of rebellion may be raised amongst us – a resistance to the inevitability of technological power (2014, 152). We can see how his dark tetrad might be applied in the case of artificial intelligence (Figure 3).

This just illustrates the potential – other examples are possible! – but it serves as an alternative perspective to that seen with McLuhan’s tetrad. Like McLuhan’s tetrad, it incorporates its own internal oppositions – between abandonment and substitution, between disappearance and inertia, between abandonment and disappearance, and between substitution and inertia. In combination, these again provide added nuance to the picture. Furthermore, in the light of his dark tetrad, Kroker suggests that we might ask

… that technology itself be rendered problematic in detail and definition. Rendered problematic, that is, by the practice of subtle imagination, depth perception, complex human intelligence – in short, by the posthuman imagination. (Kroker 2014, 194)

In other words, a considered problematisation of artificial intelligence through the application of our perception, understanding, and imagination provides the means of not simply addressing the outcomes of the technologies, but the opportunity to resist and reshape them appropriately.

Fundamentally, understanding the constellation of affordances and disaffordances – not simply the practical or technical ones but those exposed by the probes of the tetrads – enables us to be clearer about what we might exploit and, at the same time, what we need to resist when it comes to these technologies. It also encourages us to take more informed decisions and consider the range of implications of their use, rather than just focusing on whether a technical objective may or may not be achieved. Considering AI in these terms enables us to approach it knowledgeably, rather than just drifting along with the technical flow, and supports us in resisting external pressures and expectations.

[This post is based on part of a keynote presentation given to the Archaeo-Informatics 2024 ‘Use and Challenges of AI in Archaeology’ conference. My thanks to Lutgarde Vandeput and Nurdan Atalan Çayırezmez and colleagues for the invitation to speak.]

References

Aguado-Terrón, J. M., & Grandío-Pérez, M. del M. (2024). Toward a Media Ecology of GenAI: Creative Work in the Era of Automation. Palabra Clave 27(1), e2718. https://doi.org/10.5294/pacla.2024.27.1.8

Ciccone, G. (2024). ChatGPT as a Digital Assistant for Archaeology: Insights from the Smart Anomaly Detection Assistant Development. Heritage 7(10), Article 10. https://doi.org/10.3390/heritage7100256

Cobb, P. J. (2023). Large Language Models and Generative AI, Oh My!: Archaeology in the Time of ChatGPT, Midjourney, and Beyond. Advances in Archaeological Practice 11(3), 363–369. https://doi.org/10.1017/aap.2023.20

Elish, M. C., & boyd, danah. (2018). Situating methods in the magic of Big Data and AI. Communication Monographs 85(1), 57–80. https://doi.org/10.1080/03637751.2017.1375130

Gustafson, E. (2024). Probing the limits of Figures and Grounds: Artificial Intelligence and Quantum Computation. New Explorations 4(1). https://doi.org/10.7202/1111636ar

Kroker, A. (2014). Exits to the posthuman future. Polity Press.

Magnani, M., & Clindaniel, J. (2023). Artificial Intelligence and Archaeological Illustration. Advances in Archaeological Practice 11(4), 452–460. https://doi.org/10.1017/aap.2023.25

McLuhan, M., & McLuhan, E. (1988). Laws of media: The new science. Univ. of Toronto Press.

Ott, B. L. (2023). The Digital Mind: How Computers (Re)Structure Human Consciousness. Philosophies 8(1), 4. https://doi.org/10.3390/philosophies8010004

Sobotkova, A., Kristensen-McLachlan, R. D., Mallon, O., & Ross, S. A. (2024). Validating predictions of burial mounds with field data: The promise and reality of machine learning. Journal of Documentation (ahead-of-print). https://doi.org/10.1108/JD-05-2022-0096

Spennemann, D. H. R. (2024). Generative Artificial Intelligence, Human Agency and the Future of Cultural Heritage. Heritage 7, 3597–3609. https://doi.org/10.3390/heritage7070170